by Ultra Tendency

The rapid growth of Artificial Intelligence, especially Large Language Models (LLMs), has unlocked incredible potential. From advanced chatbots to powerful content creation tools, AI is changing how we work and live. But with this power comes a significant responsibility: ensuring these AI systems operate safely, securely, and ethically. This article looks at the crucial role of “guardrails” in protecting AI systems and their users, explaining the mechanisms and frameworks that make this possible.

Why AI Security Matters: The Need for Guardrails

Imagine an AI chatbot designed to help customers with product questions. Without proper safeguards, this bot could easily:

- Spread misinformation: Providing incorrect product details or even making things up.

- Use offensive language: Responding with inappropriate or harmful content.

- Suggest illegal activities: Guiding users toward illicit actions.

- Show bias: Perpetuating societal biases found in its training data.

- Leak sensitive information: Exposing private data through user interactions.

Understanding the Guardrail Pipeline

To protect AI models effectively, a structured approach is needed. One common way to organize guardrails for AI systems is as a four-component pipeline:

- Checker: This component scans AI-generated content for issues like offensive language, bias, or deviations from desired behavior.

- Corrector: If issues are found, the Corrector refines or fixes the problematic outputs.

- Rail: This acts as a manager, ensuring the interaction between the Checker and Corrector follows predefined standards and policies.

- Guard: The overall coordinator, the Guard gathers results from all components and delivers the final, compliant output.

This modular design helps ensure AI responses remain safe, unbiased, and aligned with ethical guidelines.
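The division of labor between the four components can be sketched in a few lines of Python. This is a minimal illustration, not the design of any specific framework. The function names simply mirror the four roles, and the word-list Checker and masking Corrector are placeholder assumptions standing in for real classifiers and rewriters.

```python
import re
from dataclasses import dataclass, field
from typing import List, Tuple

# Placeholder policy: a tiny word list standing in for a real offensive-language classifier.
BANNED_WORDS = {"idiot", "stupid"}


@dataclass
class CheckResult:
    passed: bool
    issues: List[str] = field(default_factory=list)


def checker(text: str) -> CheckResult:
    """Checker: scan the text and report every banned word it contains."""
    words = re.findall(r"[a-z']+", text.lower())
    issues = sorted({w for w in words if w in BANNED_WORDS})
    return CheckResult(passed=not issues, issues=issues)


def corrector(text: str, issues: List[str]) -> str:
    """Corrector: mask each flagged word (case-insensitively) with asterisks."""
    for word in issues:
        text = re.sub(rf"\b{re.escape(word)}\b", "*" * len(word), text, flags=re.IGNORECASE)
    return text


def rail(text: str, max_rounds: int = 2) -> Tuple[str, bool]:
    """Rail: enforce the policy "check, correct, re-check" for a bounded number of rounds."""
    for _ in range(max_rounds):
        result = checker(text)
        if result.passed:
            return text, True
        text = corrector(text, result.issues)
    return text, checker(text).passed


def guard(llm_output: str, fallback: str = "Sorry, I can't help with that.") -> str:
    """Guard: coordinate the other components and return only compliant output."""
    text, ok = rail(llm_output)
    return text if ok else fallback
```

For example, guard("That is a stupid idea.") returns "That is a ****** idea.". Output the Corrector could not repair within the allowed rounds would be replaced by the fallback message instead.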

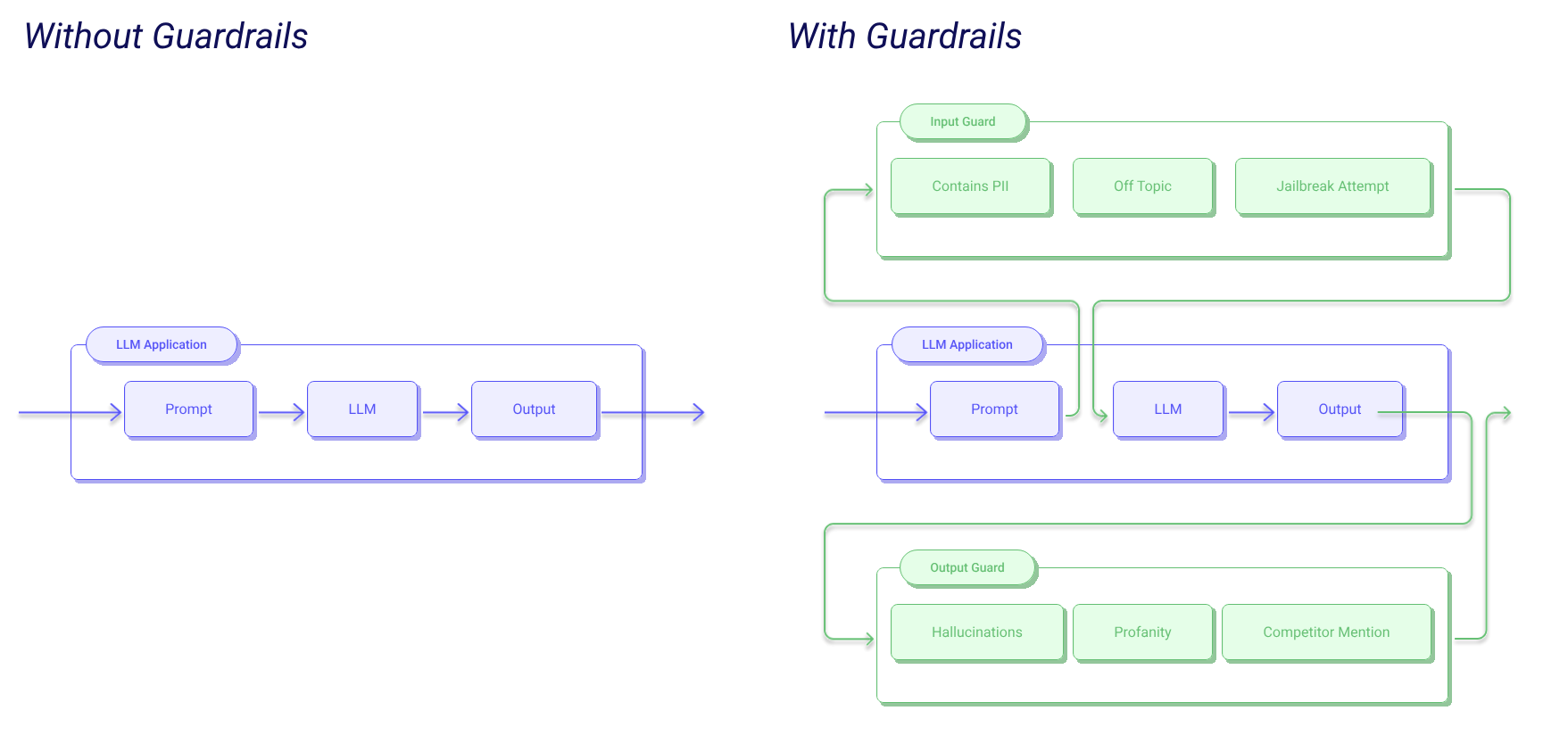

Two Lines of Defense: Input and Output Guarding

Securing AI interactions means protecting both the information entering the system and the information leaving it. This involves two key defense layers:

- Input Guard: This layer filters incoming prompts before they reach the LLM. Its main goals are to:

  - Prevent Personally Identifiable Information (PII) from being processed.

  - Filter out off-topic content that could lead to irrelevant or harmful responses.

  - Detect and block jailbreak attempts, where users try to bypass the AI’s safety protocols.

- Output Guard: This layer screens the LLM’s generated responses after processing. Its key functions include:

  - Identifying and reducing hallucinations (AI generating false information).

  - Detecting and filtering profanity or offensive language.

  - Preventing the mention of competitors or other undesirable content.
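The two layers can be sketched as plain functions that sit on either side of the model call. This is a minimal illustration under stated assumptions, not how any particular framework implements them. The PII and jailbreak regexes are tiny hypothetical samples, and the output check uses word overlap with the source context as a crude proxy for hallucination detection. Off-topic, profanity, and competitor checks would slot into the same two functions.

```python
import re
from typing import Optional

# Illustrative patterns only; real PII and jailbreak detectors are far more thorough.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}
JAILBREAK_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"you are now in developer mode", re.IGNORECASE),
]


def input_guard(prompt: str) -> Optional[str]:
    """Input guard: return a rejection reason, or None if the prompt may reach the LLM."""
    for name, pattern in PII_PATTERNS.items():
        if pattern.search(prompt):
            return f"contains PII ({name})"
    if any(p.search(prompt) for p in JAILBREAK_PATTERNS):
        return "possible jailbreak attempt"
    return None


def output_guard(answer: str, context: str, min_overlap: float = 0.5) -> bool:
    """Output guard: a crude hallucination proxy that requires every sentence
    of the answer to share enough words with the source context."""
    context_words = set(re.findall(r"\w+", context.lower()))
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = set(re.findall(r"\w+", sentence.lower()))
        if words and len(words & context_words) / len(words) < min_overlap:
            return False
    return True
```

In a chatbot, input_guard would run before the prompt is sent to the LLM and output_guard on the reply before the user sees it. A rejection reason or a False result would then trigger a refusal or a retry.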

Prompt Engineering vs. Dedicated Guardrails

While prompt engineering (carefully crafting input prompts to guide LLMs) is a useful first step, it is often not enough for strong security, because prompt injections can easily bypass even carefully worded system prompts. A user can simply instruct the AI to ignore its previous instructions, which leaves prompt engineering alone vulnerable. Dedicated guardrail frameworks address this by checking inputs and outputs independently of the model’s instructions, which makes them essential for robust security.

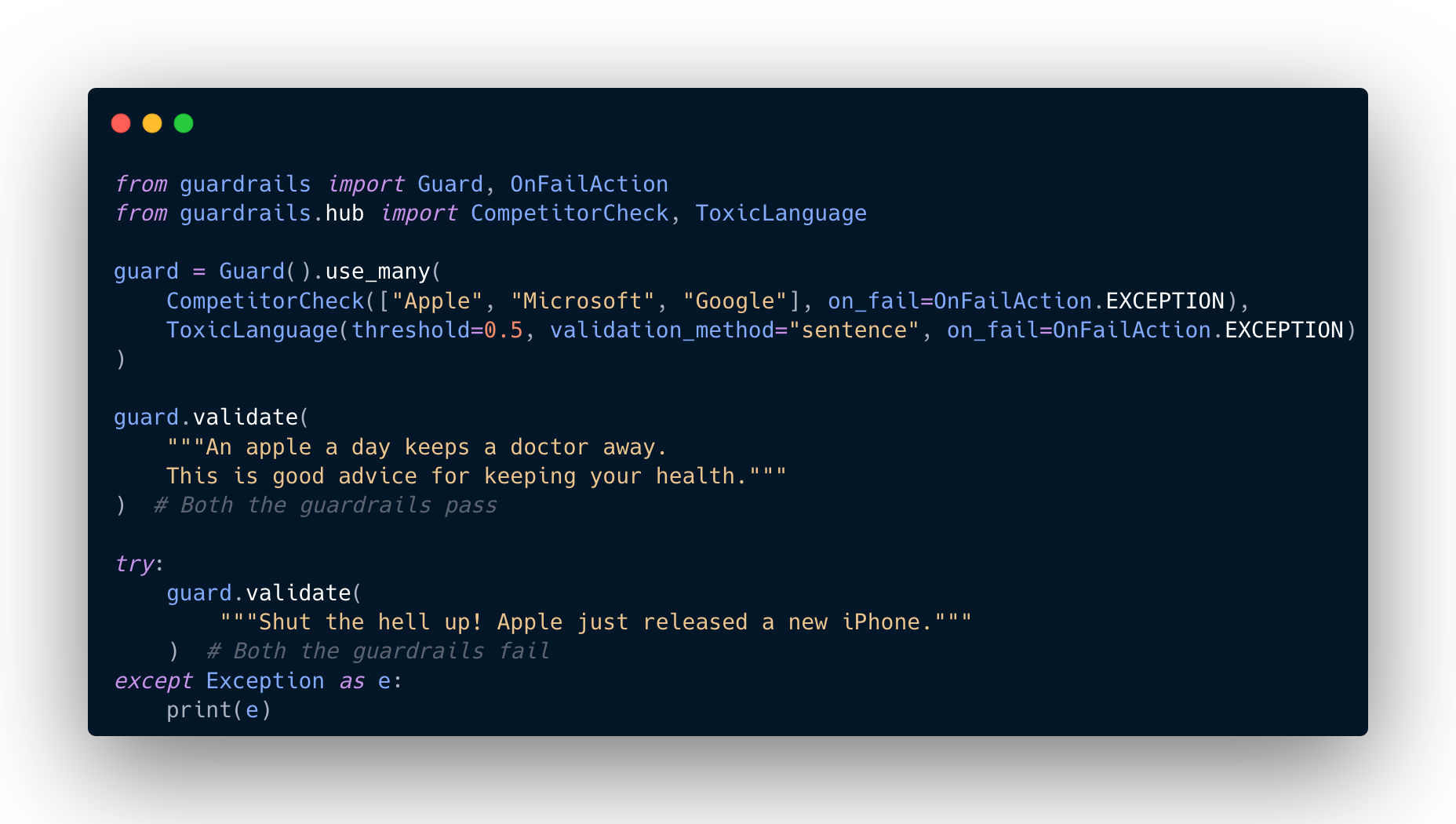

Guardrails: A Python Framework for Better Reliability

For developers building AI applications, the Guardrails Python framework offers a powerful solution. It’s designed to improve the reliability of AI applications by implementing input/output guards and helping generate structured data from LLMs.

Guardrails uses validators from the Guardrails Hub to detect, measure, and mitigate risks. These validators can handle a wide range of issues, including PII detection, profanity, NSFW content, and even translation quality.

A practical setup for competitor checks and toxic language detection combines two Hub validators. CompetitorCheck, configured with competitor names such as “Apple,” “Microsoft,” and “Google,” flags any response that mentions them, while ToxicLanguage flags offensive language. Setting the on-fail action to OnFailAction.EXCEPTION makes any violation stop the process immediately and raise an error, providing clear feedback.
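To show the pattern behind this setup without installing the Guardrails package or any Hub validators, here is a dependency-free sketch in plain Python. It is not the Guardrails API. SimpleGuard, FailPolicy, competitor_check, and toxic_language are hypothetical names, and the regex and word-list checks are crude stand-ins for the trained detectors a production validator would use. What it does mirror is the control flow described above: validators run in order, and under the exception policy the first violation stops processing and raises an error.

```python
import re
from enum import Enum
from typing import Callable, List, Optional, Set

# A validator returns an error message, or None if the text passes.
Validator = Callable[[str], Optional[str]]


class FailPolicy(Enum):
    EXCEPTION = "exception"  # stop at the first violation and raise
    NOOP = "noop"            # collect violations but let the text through


class ValidationError(Exception):
    """Raised when a validator fails under FailPolicy.EXCEPTION."""


def competitor_check(competitors: List[str]) -> Validator:
    # Case-sensitive whole-word match: a crude proxy for spotting company names,
    # so "an apple a day" passes while "Apple" is flagged.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, competitors)) + r")\b")

    def validate(text: str) -> Optional[str]:
        match = pattern.search(text)
        return f"mentions competitor {match.group(0)!r}" if match else None

    return validate


def toxic_language(lexicon: Set[str]) -> Validator:
    # Word-list lookup standing in for a trained toxicity classifier.
    def validate(text: str) -> Optional[str]:
        hits = sorted(set(re.findall(r"[a-z']+", text.lower())) & lexicon)
        return f"toxic language {hits}" if hits else None

    return validate


class SimpleGuard:
    """Runs validators in order and applies one failure policy to all of them."""

    def __init__(self, *validators: Validator, on_fail: FailPolicy = FailPolicy.EXCEPTION):
        self.validators = validators
        self.on_fail = on_fail

    def validate(self, text: str) -> List[str]:
        errors = []
        for validator in self.validators:
            error = validator(text)
            if error is None:
                continue
            if self.on_fail is FailPolicy.EXCEPTION:
                raise ValidationError(error)
            errors.append(error)
        return errors
```

With guard = SimpleGuard(competitor_check(["Apple", "Microsoft", "Google"]), toxic_language({"hell"})), validating "An apple a day keeps the doctor away." returns an empty list, while "Shut the hell up! Apple just released a new iPhone." raises ValidationError at the competitor check. Switching to FailPolicy.NOOP would instead return both violations and let the text through.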

Llama Guard: A Specialized Security Framework

For more advanced security needs, Meta offers Llama Guard, a specialized safety model. It is relatively small (8 billion parameters in Llama Guard 2 and Llama Guard 3 8B) and is designed specifically to enforce safety policies and monitor AI interactions.

Llama Guard can be customized by editing the safety categories it is prompted with, and it is often combined with simpler checks such as regex patterns. This provides strong protection against potential threats while remaining flexible.

In an application, Llama Guard’s verdict typically drives simple if-else logic:

- If Llama Guard deems the input safe, the input proceeds to further processing (e.g., a QA bot).

- If the input is flagged as unsafe, it is routed to a “PAS” (Policy Administered System) or a similar handler, which informs the user that the request violates the terms of service.
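This routing fits in a few lines. In the sketch below, classify is a hypothetical stand-in for a call to Llama Guard through whatever inference stack you use, and the parser assumes the reply format described in Meta’s Llama Guard model cards: a first line reading "safe" or "unsafe", optionally followed by a line of comma-separated category codes. The QA bot and policy handler are placeholders as well.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Verdict:
    safe: bool
    categories: List[str] = field(default_factory=list)


def parse_verdict(raw: str) -> Verdict:
    """Parse a first line of 'safe' or 'unsafe', plus optional category codes (assumed format)."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    if lines and lines[0] == "safe":
        return Verdict(safe=True)
    if lines and lines[0] == "unsafe":
        codes = lines[1].split(",") if len(lines) > 1 else []
        return Verdict(safe=False, categories=[c.strip() for c in codes if c.strip()])
    # Fail closed: anything we cannot parse is treated as unsafe.
    return Verdict(safe=False, categories=["unparsed"])


def route(
    user_input: str,
    classify: Callable[[str], str],
    qa_bot: Callable[[str], str],
    policy_handler: Callable[[List[str]], str],
) -> str:
    """If the classifier says safe, forward to the QA bot; otherwise hand off to the policy handler."""
    verdict = parse_verdict(classify(user_input))
    if verdict.safe:
        return qa_bot(user_input)
    return policy_handler(verdict.categories)
```

Treating an unparseable reply as unsafe (failing closed) is a design choice. A system that prioritizes availability might log such replies and let the request through instead.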

Llama Guard classifies input text against a set of predefined safety categories. These categories can cover areas like:

- Violence, Crime: Preventing content related to violent or criminal acts.

- Financial Crimes: Prohibiting content that could facilitate financial crimes.

- Specialized Advice: Restricting the generation of financial, medical, or legal advice.

- Privacy: Protecting sensitive information.

For example, if you ask about opening a bank account, Llama Guard might flag the request as a violation related to financial services, treating it as potentially risky even when the request itself is harmless.
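Because Llama Guard reads its safety categories from the prompt, rewording them is one practical way to tune it. The sketch below assembles a prompt with a custom taxonomy based on the four categories above. Its layout (a category block, a conversation block, and a request for a "safe"/"unsafe" first line) is modeled on the template Meta publishes for Llama Guard. However, the exact wording and the special tokens the model’s chat template adds vary by version, so treat it as an assumption to check against the model card. The category codes and descriptions, including the note exempting everyday banking questions, are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical custom taxonomy based on the categories listed above.
CATEGORIES: Dict[str, str] = {
    "S1": "Violence and Crime. Content that encourages or helps plan violent or criminal acts.",
    "S2": "Financial Crimes. Content that facilitates fraud, money laundering, or similar crimes.",
    "S3": ("Specialized Advice. Tailored financial, medical, or legal advice. "
           "General questions about everyday banking, such as how to open an account, are allowed."),
    "S4": "Privacy. Content that exposes sensitive personal information.",
}


def build_prompt(conversation: List[Tuple[str, str]], categories: Dict[str, str]) -> str:
    """Assemble a Llama Guard-style classification prompt (layout is an assumption)."""
    category_block = "\n".join(f"{code}: {text}" for code, text in categories.items())
    convo_block = "\n\n".join(f"{role}: {text}" for role, text in conversation)
    return (
        "Task: Check if there is unsafe content in 'User' messages in conversations "
        "according to our safety policy with the below categories.\n\n"
        "<BEGIN UNSAFE CONTENT CATEGORIES>\n"
        f"{category_block}\n"
        "<END UNSAFE CONTENT CATEGORIES>\n\n"
        "<BEGIN CONVERSATION>\n\n"
        f"{convo_block}\n\n"
        "<END CONVERSATION>\n\n"
        "Provide your safety assessment for ONLY THE LAST User message in the above conversation:\n"
        " - First line must read 'safe' or 'unsafe'.\n"
        " - If unsafe, a second line must include a comma-separated list of violated categories."
    )
```

Narrowing a category description in this way addresses over-blocking of harmless requests like the bank-account question without disabling the category entirely.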

Balancing Security with Usability: The Challenges

Implementing guardrails isn’t without its challenges. Key considerations include:

- Latency: Every additional check adds processing time, and checks that call another model, such as Llama Guard, can noticeably slow down responses.

- Strictness vs. Usability: Guardrails must be strict enough to prevent harm but not so restrictive that they hinder legitimate use cases. For instance, a standard Llama Guard setup might block queries about opening bank accounts due to potential financial risks, requiring careful tuning.

- Evolving Threats: As AI capabilities advance, so do the methods used to exploit them. Continuous research and adaptation are necessary to address new attack vectors.

- Integration and Customization: Guardrails should integrate smoothly into existing workflows and be customizable to meet specific application needs.
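When checks are independent of one another, part of the latency cost can be recovered by running them concurrently rather than one after another, so the total wait is roughly that of the slowest check. Here is a minimal asyncio sketch. pii_check and toxicity_check are hypothetical placeholders whose asyncio.sleep calls simulate a network or model round trip.

```python
import asyncio
import time
from typing import Awaitable, Callable, List, Optional, Tuple

# A check returns an error message, or None if the text passes.
Check = Callable[[str], Awaitable[Optional[str]]]


async def pii_check(text: str) -> Optional[str]:
    await asyncio.sleep(0.2)  # simulates a network or model round trip
    return "PII detected" if "@" in text else None


async def toxicity_check(text: str) -> Optional[str]:
    await asyncio.sleep(0.2)  # simulates a network or model round trip
    return "toxic language" if "hell" in text.lower().split() else None


async def run_checks(text: str, checks: List[Check]) -> List[str]:
    """Start every check at once and wait for all of them to finish."""
    results = await asyncio.gather(*(check(text) for check in checks))
    return [r for r in results if r is not None]


def check_all(text: str, checks: List[Check]) -> Tuple[List[str], float]:
    """Run the checks concurrently; return the failures and the elapsed wall-clock time."""
    start = time.perf_counter()
    errors = asyncio.run(run_checks(text, checks))
    return errors, time.perf_counter() - start
```

With two 0.2-second checks, a sequential loop would take about 0.4 seconds, while check_all finishes in roughly 0.2. Concurrency does not help when one stage depends on another’s result (for example, a Corrector that needs the Checker’s findings), so such stages still run in sequence.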

Conclusion: A Necessary Step for AI Safety

As AI systems become more integrated into our daily lives, the importance of strong security measures cannot be overstated. Frameworks like Guardrails and Llama Guard provide essential tools for developers to build safer, more reliable, and ethically sound AI applications. By implementing comprehensive input and output guarding, we can harness the power of AI while reducing its inherent risks, ensuring a secure and responsible AI future.