by Ultra Tendency

Share

by Ultra Tendency

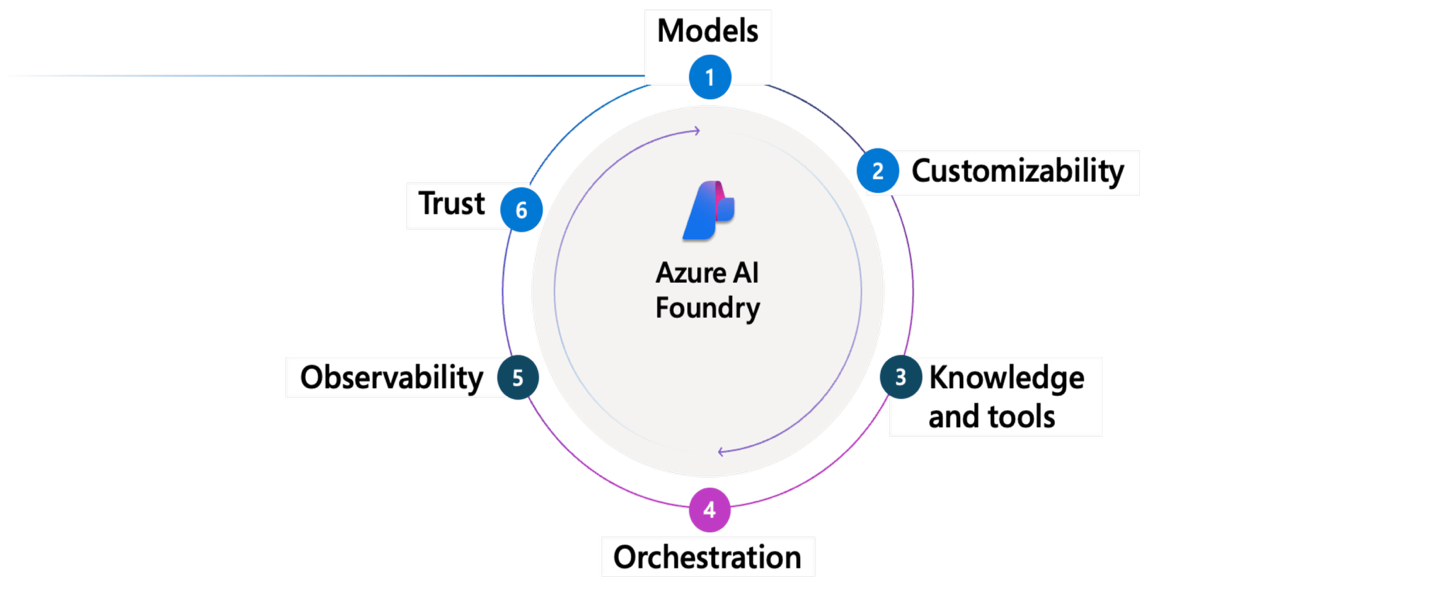

The AI landscape is evolving at breakneck speed, and frankly, keeping up with the latest tools can feel overwhelming. But here’s the thing—Microsoft’s Azure AI Foundry has emerged as something genuinely different. After diving deep into this platform, I’ve come to see it as more than just another AI service. It’s actually reshaping how we think about building enterprise-grade AI solutions.

What Makes Azure AI Foundry Special?

Think of Azure AI Foundry as Microsoft’s answer to a common enterprise problem: how do you build sophisticated AI applications when your team doesn’t necessarily have PhD-level expertise in machine learning? As Fadi Wannes put it during a recent presentation, “Azure AI Foundry is like a Microsoft enterprise grade platform for testing and deploying AI and ML solutions. It offers a full suite of tools and services for pre-built models. And if you want to bring your own model, it works on that too, enabling organizations to build scalable, secure AI applications without requiring deep in-house AI expertise.”

What struck me most is how the platform handles the entire lifecycle—from initial model selection through deployment and ongoing management. It’s not just another API service; it’s a comprehensive environment that takes the complexity out of enterprise AI development.

The Architecture That Actually Makes Sense

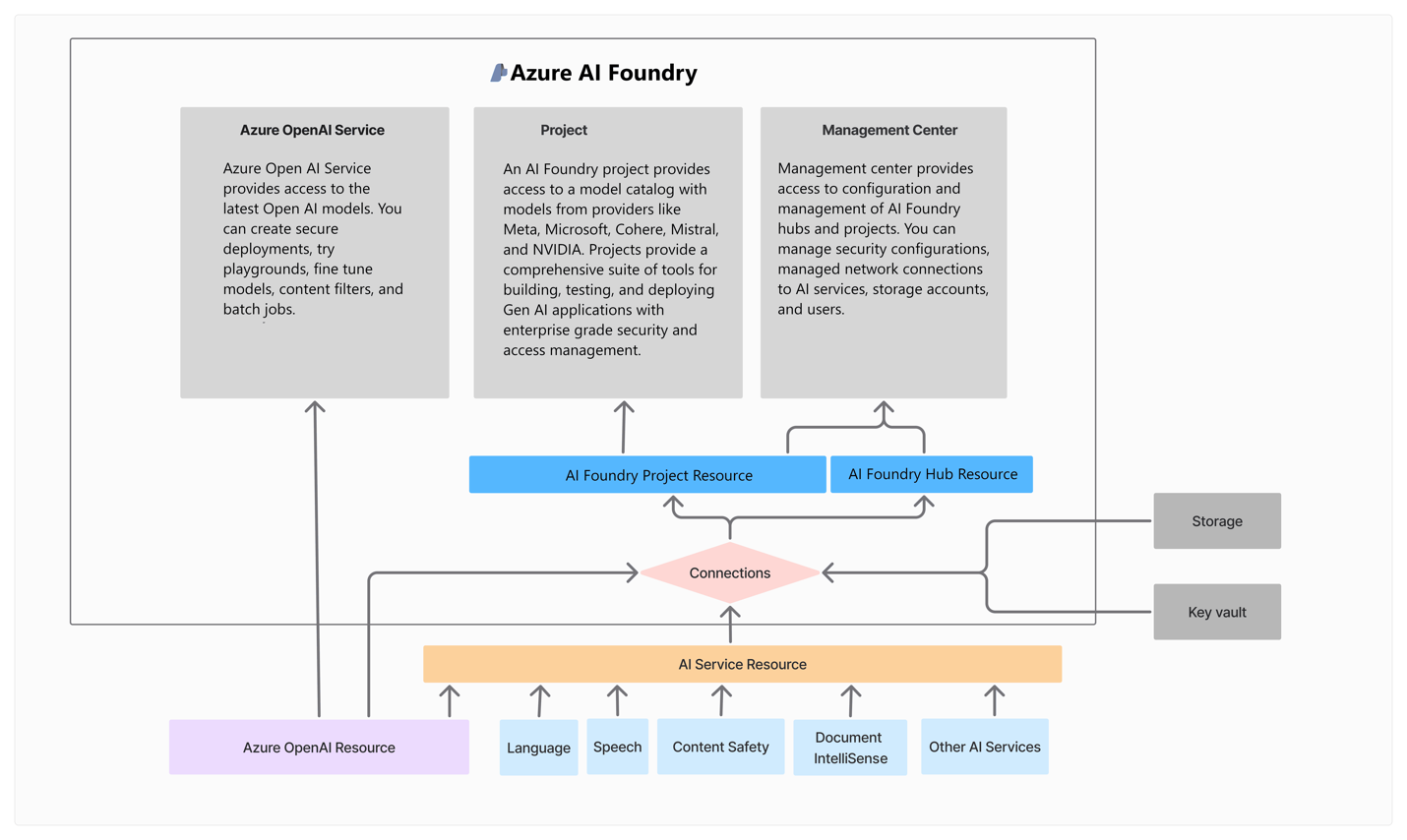

When I first explored Azure AI Foundry, I was impressed by how thoughtfully the architecture is designed. At its heart sits the Azure OpenAI Service, which gives you secure access to the latest models like GPT-4 and GPT-4 Turbo. But what makes this interesting isn’t just the models themselves—it’s how they’re integrated into a broader ecosystem.

The platform organizes everything around Projects, which serve as your development environment. Each project comes with access to what Microsoft calls the Model Catalog, and this is where things get exciting. You’re not limited to OpenAI models; you can choose from offerings by Microsoft, Mistral, Meta’s Llama family, DeepSeek, and others. As Wannes explained, “It provides a variety of models that we can use from Microsoft, Mistral, Meta Llama models, DeepSeek, and all of that. So it’s simple and plug-and-play to use these models inside AI Foundry.”

The Management Center handles all the administrative complexity—security configurations, user access, cost monitoring, and those crucial details like model parameters and content safety policies. It’s the kind of centralized control that enterprise teams actually need but rarely get in a user-friendly package.

Integration That Just Works

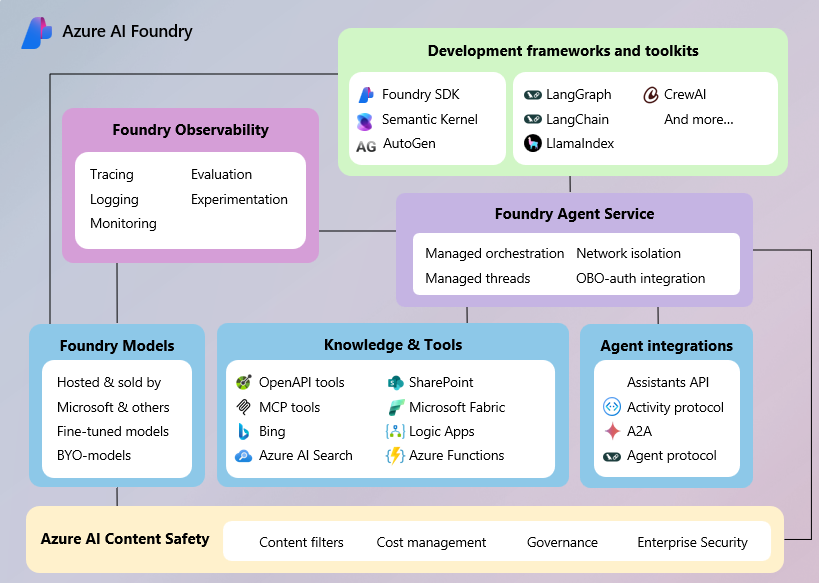

One of the most refreshing aspects of Azure AI Foundry is how naturally it integrates with tools you’re probably already using. Azure AI Search handles the heavy lifting for information retrieval, while Azure AI Content Safety keeps your applications compliant with corporate policies. If you’re building conversational AI, Copilot Studio provides a low-code approach that lets you create sophisticated interfaces without starting from scratch.

For developers, the platform plays nicely with Visual Studio and GitHub, supporting proper CI/CD workflows. The Python SDK is particularly well-designed, giving you programmatic access to everything from models to deployment endpoints. And if you’re already invested in frameworks like LangChain or LlamaIndex, you’ll find that Azure AI Foundry supports them natively.

Beyond Simple Model Deployment

Here’s where Azure AI Foundry really differentiates itself: it’s not just about deploying a model and hoping for the best. The platform gives you several sophisticated approaches to customization.

Fine-tuning lets you take a general-purpose model and specialize it for your specific domain or use case. This isn’t just tweaking parameters—you’re actually training the model on your curated datasets to improve accuracy and relevance for your particular context.

Then there’s Retrieval-Augmented Generation, or RAG, which has become essential for many enterprise applications. Instead of relying solely on the model’s training data, RAG connects your AI to live knowledge bases, dramatically improving accuracy for question-answering systems and customer support applications.

Prompt engineering might sound simple, but it’s actually an art form. The ability to shape model behavior through carefully crafted prompts gives you remarkable control over output format and detail without requiring model retraining.

The Rise of Agentic AI

Perhaps the most exciting development in Azure AI Foundry is its focus on agentic AI. This isn’t just about getting better responses from language models—it’s about creating intelligent agents that can perform complex tasks autonomously.

As Wannes described it, “Right now, we are in the probabilistic response, which is like we have an agent to create an agent. We’ll use a model that is trained. We give it some instructions and some tools, and from this input, it will give us suggestions about what it will do. So it can do retrieval, it can index our data, and it will have memory about our questions and our information, and it will trigger actions.”

These agents combine language understanding with external tool integration, memory capabilities, and orchestration logic. But what’s really powerful is the platform’s support for multi-agent systems, where multiple specialized agents collaborate to handle complex workflows. Tools like LangGraph and Semantic Kernel make this orchestration manageable rather than overwhelming.

Solving Real Enterprise Challenges

Having worked with AI implementations in enterprise environments, I appreciate how Azure AI Foundry addresses practical challenges that often get overlooked in academic discussions.

Model sprawl is a real problem—organizations end up with dozens of different models deployed across various systems with no central visibility or governance. Azure AI Foundry’s centralized model catalog and management capabilities provide the oversight that enterprise teams desperately need.

Safety and compliance aren’t afterthoughts here. The platform includes comprehensive monitoring, automated policy enforcement, and content filtering that integrates with Azure Active Directory and role-based access controls. System Message Control and Prompt Shielding ensure that AI responses stay within acceptable boundaries, while Azure AI Content Safety provides real-time monitoring.

The observability features deserve special mention. You get detailed tracing of model interactions, performance metrics, and quality assessments that help you understand not just what your AI is doing, but how well it’s doing it.

Deployment Options That Scale

When it comes to deployment, Azure AI Foundry offers flexibility that matches how organizations actually work. You can choose standard endpoints for traditional deployments, serverless endpoints for cost optimization, or managed compute when you need Azure to handle the infrastructure complexity.

The integration with Azure Developer CLI, GitHub Actions, and Azure SDKs creates deployment workflows that feel natural rather than forced. You’re not fighting the platform to get your models into production—you’re working with it.

Understanding the Service Model Sweet Spot

Azure AI Foundry positions itself as a Platform-as-a-Service offering, which turns out to be the sweet spot for many organizations. If you need maximum control and don’t mind managing infrastructure, pure IaaS gives you that flexibility. If you want the absolute fastest deployment with minimal technical requirements, SaaS solutions like Copilot Studio offer drag-and-drop interfaces.

But most enterprise teams want something in between—managed services that reduce complexity while still allowing meaningful customization. That’s exactly what Azure AI Foundry delivers.

Looking Toward the Future

After spending time with this platform, I find myself agreeing with the sentiment expressed by the experts I’ve spoken with. As Fadi Wannes put it, “I think it is 100% the future.” Domingo Muñiz Daza echoed this: “I think that the AI tool we are building with tenancy on the scale will be the future.”

The shift toward agent-based systems and multi-agent collaboration represents a fundamental change in how we think about AI applications. Instead of static models that respond to queries, we’re moving toward intelligent systems that can reason, plan, and execute complex tasks autonomously.

Azure AI Foundry provides the infrastructure and tooling to navigate this transition successfully. The platform’s commitment to continuous improvement, with regular feature previews and active community engagement, suggests that Microsoft understands the responsibility that comes with building foundational AI infrastructure.

For organizations looking to move beyond experimental AI projects toward production-scale solutions, Azure AI Foundry offers a compelling path forward. It’s not just about the technology—it’s about having a platform that grows with your AI maturity and supports the journey from initial experimentation to enterprise-wide deployment.

The future of AI development is collaborative, scalable, and increasingly autonomous. Azure AI Foundry is positioning itself to be the platform that makes that future accessible to organizations ready to embrace it.