by Ultra Tendency

After spending the better part of five years building and maintaining traditional hub-and-spoke architectures in Azure, I’ll admit I was skeptical when Microsoft started pushing Virtual WAN. Another managed service promising to solve all our problems? I’d heard that before. But after working with vWAN for the past eighteen months across several enterprise deployments, I can honestly say it’s changed how I think about cloud networking.

The breaking point for me came during a particularly painful network expansion for a financial services client. We were hitting the dreaded VNet peering limits, manual route configurations were taking weeks to implement correctly, and frankly, the operational overhead was consuming far too much of our team’s bandwidth. That’s when I decided to give Virtual WAN a serious look.

The Traditional Approach That Served Us Well (Until It Didn’t)

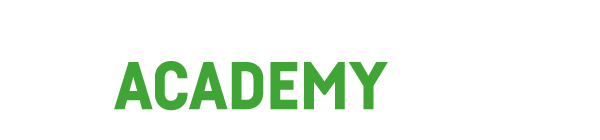

For years, the classic hub-and-spoke pattern was our go-to architecture. It made sense—centralize your shared services in a hub VNet, connect your workload VNets as spokes, and route everything through the middle. We’d typically deploy something like this:

    Hub VNet: 10.0.0.0/16
    ├── AzureFirewallSubnet: 10.0.1.0/26
    ├── GatewaySubnet: 10.0.2.0/27
    └── SharedServicesSubnet: 10.0.3.0/24

    Spoke VNets:
    ├── Production-VNet: 10.1.0.0/16
    ├── Development-VNet: 10.2.0.0/16
    └── DMZ-VNet: 10.3.0.0/16

This approach worked beautifully for smaller deployments. The architecture was straightforward, costs were predictable, and most network engineers could wrap their heads around it without much trouble. But here’s the thing—it doesn’t scale gracefully.
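Before adding spokes to a layout like the one above, it can help to check the address plan mechanically. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `find_plan_problems` is my own for illustration, and the prefixes are simply the ones from the example layout.

```python
import ipaddress
from itertools import combinations

# Prefixes copied from the example hub-and-spoke layout above.
HUB = ipaddress.ip_network("10.0.0.0/16")
HUB_SUBNETS = {
    "AzureFirewallSubnet": ipaddress.ip_network("10.0.1.0/26"),
    "GatewaySubnet": ipaddress.ip_network("10.0.2.0/27"),
    "SharedServicesSubnet": ipaddress.ip_network("10.0.3.0/24"),
}
SPOKES = {
    "Production-VNet": ipaddress.ip_network("10.1.0.0/16"),
    "Development-VNet": ipaddress.ip_network("10.2.0.0/16"),
    "DMZ-VNet": ipaddress.ip_network("10.3.0.0/16"),
}


def find_plan_problems(hub, hub_subnets, spokes):
    """Return human-readable problems found in the address plan (hypothetical helper)."""
    problems = []
    # Every hub subnet must sit inside the hub address space.
    for name, subnet in hub_subnets.items():
        if not subnet.subnet_of(hub):
            problems.append(f"{name} {subnet} is outside hub {hub}")
    # Hub subnets must not overlap each other.
    for (a_name, a), (b_name, b) in combinations(hub_subnets.items(), 2):
        if a.overlaps(b):
            problems.append(f"{a_name} {a} overlaps {b_name} {b}")
    # Peered VNets (hub and all spokes) must not overlap each other.
    vnets = {"Hub VNet": hub, **spokes}
    for (a_name, a), (b_name, b) in combinations(vnets.items(), 2):
        if a.overlaps(b):
            problems.append(f"{a_name} {a} overlaps {b_name} {b}")
    return problems


problems = find_plan_problems(HUB, HUB_SUBNETS, SPOKES)
print(problems or "address plan is consistent")
```

Running a check like this in a pipeline is one way to catch an overlapping spoke before anyone tries to peer it.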

What really caught us off guard was how quickly we’d hit Azure Resource Manager’s VNet peering limits. Sure, 500 peerings per VNet sounds like a lot until you’re supporting a large enterprise with dozens of business units, each with their own development, staging, and production environments. And don’t get me started on the manual work involved in adding each new spoke. Every new connection meant updating route tables, configuring security rules, and testing connectivity—all manual processes that introduced opportunities for human error.
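To get a feel for how quickly that headroom disappears, here is a rough back-of-the-envelope sketch. It assumes each spoke consumes one peering on the hub VNet and uses the 500 figure quoted above; the `vnets_per_environment` parameter and the sample numbers are hypothetical, not taken from a real client.

```python
PEERING_LIMIT_PER_VNET = 500  # figure quoted in the paragraph above


def spokes_needed(business_units, environments_per_unit, vnets_per_environment=1):
    """Number of spoke VNets (and therefore hub peerings) a given org shape requires."""
    return business_units * environments_per_unit * vnets_per_environment


def max_business_units(environments_per_unit, vnets_per_environment=1,
                       limit=PEERING_LIMIT_PER_VNET):
    """How many business units fit on one hub before the peering limit is reached."""
    return limit // (environments_per_unit * vnets_per_environment)


# Hypothetical shape: 3 environments per unit, 4 VNets per environment.
print(spokes_needed(40, 3, vnets_per_environment=4))   # 480 peerings on the hub
print(max_business_units(3, vnets_per_environment=4))  # 41 business units
```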

The costs started adding up too, especially when we needed cross-region connectivity. Inter-region VNet peering charges can get expensive fast when you’re moving significant amounts of data between regions.

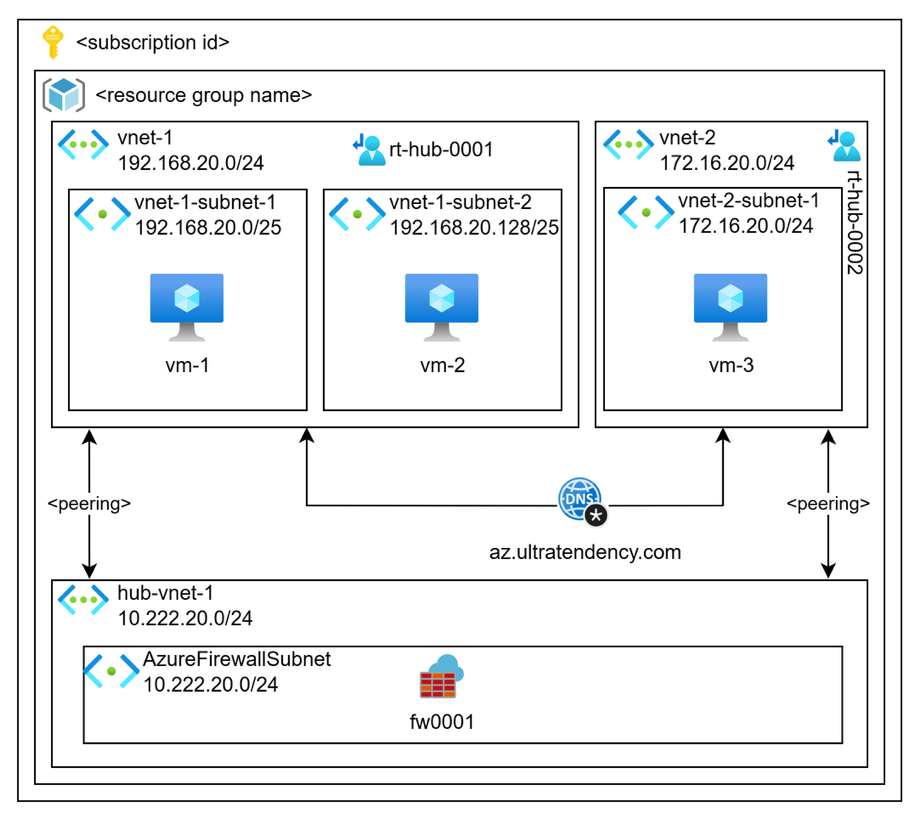

What Virtual WAN Actually Changes

Virtual WAN fundamentally shifts how you think about network topology. Instead of managing individual VNet peerings and route tables, you're working with managed hubs that handle the complexity for you. After implementing it across several client environments, what struck me most was how much operational overhead simply disappeared.

The architecture looks deceptively similar to traditional hub-and-spoke, but the underlying mechanics are completely different. Here's a typical vWAN setup I deployed recently:

    Virtual WAN: Global-Enterprise-vWAN
      Hub 1 (East US):
        Address Space: 10.100.0.0/23
        Connected VNets:
          - Production-East: 10.1.0.0/16
          - Development-East: 10.2.0.0/16
        Security: Azure Firewall
        Gateways: S2S VPN, ExpressRoute
      Hub 2 (West Europe):
        Address Space: 10.101.0.0/23
        Connected VNets:
          - Production-West: 10.11.0.0/16
          - Development-West: 10.12.0.0/16
        Security: Azure Firewall
        Gateways: S2S VPN, ExpressRoute

The magic happens in how these hubs communicate with each other and manage routing. There's no manual peering configuration, no custom route tables to maintain for basic connectivity, and the hubs automatically handle optimal path selection between regions.

One thing that really impressed me was the routing intelligence. In a traditional architecture, traffic from a spoke in one region to a spoke in another takes three legs through infrastructure you manage yourself: into the local hub, across the inter-hub connection, and out through the destination hub. Virtual WAN optimizes these paths automatically, often finding more direct routes that improve performance and reduce costs.

The Security Story Gets Better Too

Security integration was another area where vWAN surprised me. In traditional hub-and-spoke deployments, we'd spend considerable effort ensuring Azure Firewall was properly positioned in the traffic flow and that all our route tables were configured to force traffic through security controls. Miss a route table update, and you've got traffic bypassing your firewall.

With vWAN, the security integration feels much more natural. When you deploy Azure Firewall in a vWAN hub, it automatically becomes part of the routing fabric. Traffic flows through security controls by design, not by careful route table configuration. Here's how I typically configure the security policies:

    Route Table: Production-Routes
    ├── Associated VNets: Production-East, Production-West
    ├── Propagated Routes: ExpressRoute, Production branches
    └── Default Route: 0.0.0.0/0 → Azure Firewall

    Route Table: Development-Routes
    ├── Associated VNets: Development-East, Development-West
    ├── Propagated Routes: Development branches only
    └── Internet Access: Direct (cost optimization)

What's particularly nice is how this works across regions. Security policies applied in one hub automatically extend to inter-hub communication, giving you consistent security posture without duplicating configuration.
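If it helps to reason about what the default route in Production-Routes does, here is a toy model of longest-prefix matching in plain Python. It is not how you configure vWAN and it is not an Azure API; the route list is my own simplification that reuses the production VNet prefixes from the hub layout earlier, and `next_hop` is a hypothetical helper.

```python
import ipaddress

# Simplified stand-in for the Production-Routes table (illustrative only).
PRODUCTION_ROUTES = [
    ("10.1.0.0/16", "Production-East"),
    ("10.11.0.0/16", "Production-West"),
    ("0.0.0.0/0", "Azure Firewall"),  # default route catches everything else
]


def next_hop(routes, destination):
    """Pick the next hop for a destination using longest-prefix match."""
    dest = ipaddress.ip_address(destination)
    matches = []
    for prefix, hop in routes:
        network = ipaddress.ip_network(prefix)
        if dest in network:
            matches.append((network.prefixlen, hop))
    if not matches:
        return None  # no route at all, traffic would be dropped
    # The most specific (longest) matching prefix wins.
    return max(matches, key=lambda match: match[0])[1]


print(next_hop(PRODUCTION_ROUTES, "10.11.4.7"))      # Production-West
print(next_hop(PRODUCTION_ROUTES, "93.184.216.34"))  # Azure Firewall
```

The point of the sketch is that anything not covered by a more specific prefix falls through to the firewall, which is the behavior the default route is there to guarantee.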

The Identity Integration That Actually Works

Here's where things get interesting for organizations with remote workers. Traditional VPN setups often involve certificate management nightmares or separate authentication systems that nobody wants to maintain. Virtual WAN's integration with Microsoft Entra ID changed this completely.

After working with this setup across multiple client deployments, the user experience is genuinely smooth. Users authenticate with their existing corporate credentials, conditional access policies apply automatically, and there's no separate VPN client configuration to manage. The PowerShell setup is refreshingly straightforward:

$vpnClientConfig = @

But what really sold me was watching end users actually use it. No certificate installation struggles, no separate passwords to remember, and IT doesn't have to manage yet another identity system.

When the Numbers Don't Add Up (And When They Do)

Let's talk about cost, because that's usually where these conversations get uncomfortable. Virtual WAN isn't cheap—you're looking at roughly $0.25 per hour per hub plus data processing charges. For smaller environments, this can actually cost more than traditional hub-and-spoke architectures.

But here's what I learned after analyzing costs across several deployments: the break-even point comes faster than you might expect. We eliminated VPN gateway costs in spoke VNets, reduced cross-region data transfer charges through optimized routing, and most importantly, dramatically reduced operational overhead. When you factor in the engineering time saved on manual configurations and troubleshooting, the business case often makes itself.
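For a first pass at that break-even, a small calculation like the one below is usually enough. It is only a sketch: the hourly hub rate is the rough figure mentioned above, 730 hours per month is a common billing approximation, and the data processing rate, legacy cost, and engineering rate are placeholders you would replace with your own numbers.

```python
HUB_RATE_PER_HOUR = 0.25  # rough per-hub figure quoted above
HOURS_PER_MONTH = 730     # assumption: common monthly billing approximation


def monthly_vwan_cost(hubs, data_gb=0.0, data_rate_per_gb=0.0):
    """Base hub cost plus data processing; take the per-GB rate from current pricing."""
    return hubs * HUB_RATE_PER_HOUR * HOURS_PER_MONTH + data_gb * data_rate_per_gb


def breakeven_engineer_hours(vwan_cost, legacy_cost, engineer_rate_per_hour):
    """Engineering hours per month that must be saved to offset the extra spend."""
    extra_spend = max(vwan_cost - legacy_cost, 0.0)
    return extra_spend / engineer_rate_per_hour


two_hubs = monthly_vwan_cost(hubs=2)
print(two_hubs)  # 365.0 before data processing charges

# Hypothetical inputs: 150/month of legacy networking cost, 100/hour engineer rate.
print(breakeven_engineer_hours(two_hubs, legacy_cost=150.0, engineer_rate_per_hour=100.0))
```

With inputs like these, the extra spend is covered by a couple of saved engineering hours a month, which is why the operational side tends to dominate the comparison.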

That said, I wouldn't recommend vWAN for every scenario. If you're running a simple, single-region deployment with fewer than 50 spoke VNets and tight cost constraints, traditional hub-and-spoke probably still makes more sense. The complexity and cost overhead of vWAN won't pay dividends in simpler environments.

The Migration Reality

Moving from traditional hub-and-spoke to vWAN isn't something you do over a weekend. After managing several of these transitions, I've settled on a phased approach that minimizes disruption while allowing for proper testing.

The first phase involves extensive planning and assessment. You need to inventory your existing connectivity patterns, understand your current routing requirements, and calculate the real ROI based on operational savings. This isn't just a technology decision—it's an operational one.

Phase two is where the rubber meets the road: pilot implementation. I always recommend starting with non-production workloads in a single region. This gives you hands-on experience with vWAN's behavior and lets you validate your monitoring and operational procedures without risking production services.

The production migration itself requires careful orchestration. We typically plan for brief maintenance windows and always maintain fallback procedures. The actual cutover involves redirecting connections from traditional hubs to vWAN hubs, updating DNS configurations, and validating connectivity across all workloads.
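The connectivity validation step lends itself to a small script. Below is a minimal sketch, assuming a plain TCP connect is an acceptable health signal for your workloads; the endpoint addresses and ports are made up for illustration, and the demo uses a fake probe so it runs without touching a network.

```python
import socket


def tcp_probe(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def validate_cutover(endpoints, probe=tcp_probe):
    """Return the (host, port) pairs that failed the probe."""
    return [(host, port) for host, port in endpoints if not probe(host, port)]


# Hypothetical endpoints; the fake probe stands in for real connectivity.
endpoints = [("10.1.0.4", 443), ("10.11.0.4", 1433)]
reachable = {("10.1.0.4", 443)}
failed = validate_cutover(endpoints, probe=lambda host, port: (host, port) in reachable)
print(failed)  # [('10.11.0.4', 1433)]
```

In a real cutover you would drop the `probe` argument so the default `tcp_probe` is used, run the check before and after redirecting connections, and treat any new failure as a trigger for the fallback procedure.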

What consistently surprises clients is how much simpler ongoing operations become after the migration. Tasks that previously required multiple configuration changes across different systems now often involve single policy updates in the vWAN management interface.

Monitoring and Performance in Practice

One area where vWAN really shines is observability. The integration with Azure Monitor provides visibility into connection health, traffic patterns, and performance metrics that would require significant custom instrumentation in traditional architectures.

After working with these monitoring capabilities across multiple deployments, I focus on several key metrics: hub-to-hub latency should typically stay under 50ms within region and under 150ms for cross-region communication. Gateway utilization becomes critical—keep it under 80% for optimal performance. And firewall processing capacity and rule hit rates help identify both security issues and performance bottlenecks.
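Those thresholds are easy to encode as a simple check. The sketch below uses the numbers from the paragraph above; the metric names are my own labels for illustration, not Azure Monitor metric identifiers, and the sample values are invented.

```python
# Thresholds from the text; a value at or above the limit counts as a breach.
THRESHOLDS = {
    "intra_region_latency_ms": 50,
    "cross_region_latency_ms": 150,
    "gateway_utilization_pct": 80,
}


def breaches(sample):
    """Return the metrics in a sample that are not under their threshold."""
    return {
        name: value
        for name, value in sample.items()
        if name in THRESHOLDS and value >= THRESHOLDS[name]
    }


# Invented sample for illustration.
sample = {
    "intra_region_latency_ms": 42,
    "cross_region_latency_ms": 171,
    "gateway_utilization_pct": 80,
}
print(breaches(sample))
```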

The traffic analytics capabilities have been particularly valuable for optimizing routing and identifying unexpected traffic patterns that might indicate security issues or misconfigured applications.

The Limitations Nobody Talks About

Virtual WAN isn't perfect, and there are scenarios where traditional architectures still make more sense. Complex routing requirements sometimes need more flexibility than vWAN's managed approach provides. Some advanced networking features that work in traditional hub-and-spoke architectures aren't supported in vWAN environments.

Migration downtime is another reality to consider. While most transitions can be managed with minimal disruption, moving critical production workloads from one networking architecture to another inherently involves some risk and usually requires brief service interruptions.

Where This All Leads

After eighteen months of real-world vWAN experience, I find myself recommending it more often than not for enterprise clients. The operational simplification alone justifies the cost in most scenarios, and the security and performance benefits are genuine.

But what's really interesting is how vWAN changes the conversation about network architecture. Instead of spending time on low-level configuration management, we can focus on higher-level design decisions about security policies, traffic optimization, and business requirements. That shift in focus has been more valuable than I initially expected.

The technology landscape keeps evolving, and networking architectures need to evolve with it. Virtual WAN represents a meaningful step toward more manageable, scalable cloud networking. For organizations ready to embrace managed services and prioritize operational efficiency over marginal cost savings, it's worth serious consideration.

The key is honest assessment of your requirements, realistic planning for migration complexity, and commitment to learning new operational patterns. Get those right, and vWAN can genuinely simplify your network operations while improving security and performance posture.