by Soontaek Lim

What is Spark?

Apache Spark is a unified computing engine with a set of libraries for parallel data processing on a cluster. It is one of the most actively developed open-source engines for parallel processing and has become a standard tool for developers and data scientists who work with big data. Spark supports widely used programming languages (Python, Java, Scala, and R) and ships libraries for tasks ranging from SQL to streaming and machine learning. It runs anywhere from a single laptop to clusters of thousands of servers, so you can start small and scale the same workload up to a very large cluster.

How to optimize Spark

01 – Avoid Shuffling as Much as Possible

What is Shuffling?

Shuffling is a key operation in distributed data processing, and it matters in Apache Spark whenever a large dataset is spread across multiple nodes in a cluster. A shuffle redistributes and reorganizes data across the cluster, typically because a transformation needs records to be grouped or aggregated differently from how they are currently partitioned.

Shuffling can be an expensive operation in terms of time and network bandwidth, as it involves data movement and coordination between nodes. Minimizing shuffling is often a performance optimization goal when developing Spark applications. Strategies such as choosing appropriate partitioning methods, using broadcast variables, and optimizing the execution plan can help mitigate the impact of shuffling on the overall performance of Spark jobs.

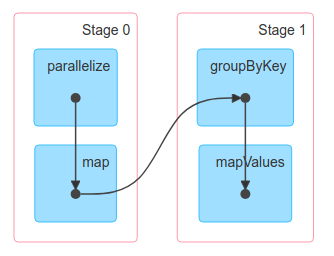

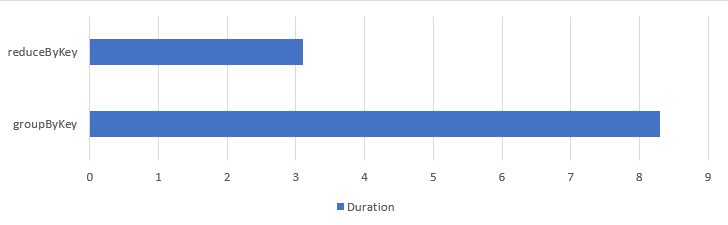

Whenever a problem can be solved with reduceByKey, use reduceByKey rather than groupByKey. groupByKey sends every key-value pair across the network, which causes the heaviest kind of shuffle (undesirable, but sometimes unavoidable). reduceByKey also shuffles, but it first combines the values for each key within every partition, so far less data is transmitted over the network. Whenever possible, prefer functions that shrink the data before the shuffle, such as reduceByKey or aggregateByKey. Even among wide transformations that produce the same result, performance can differ substantially.

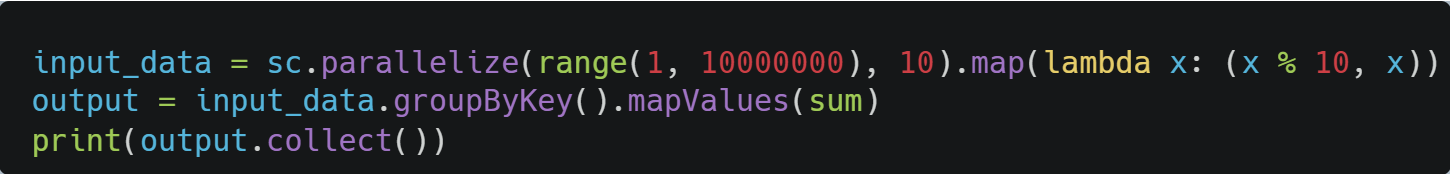

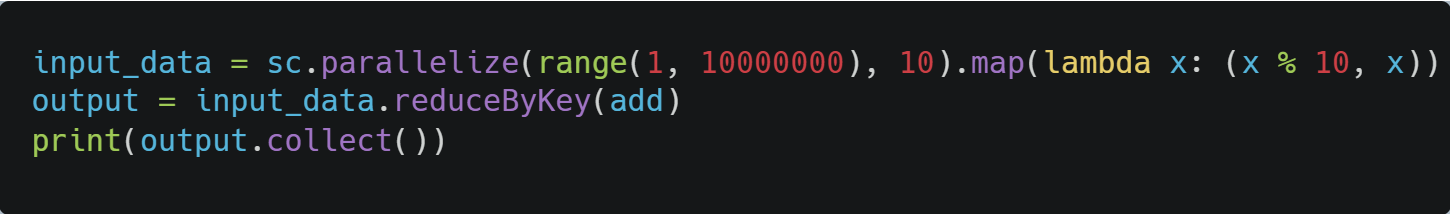

The following is an example comparing the performance of ‘groupByKey’ and ‘reduceByKey’:

Using groupByKey:

Using reduceByKey:

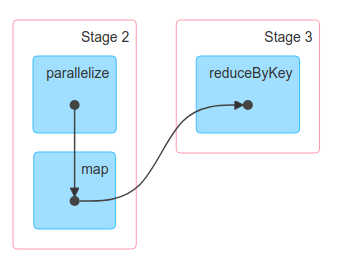

DAG groupByKey vs reduceByKey:

Comparing the total processing time:

02 – Partitioning

In a parallel environment such as a Spark cluster, it is crucial to partition the data appropriately so that every executor stays busy. With poorly partitioned data, a few nodes end up carrying a disproportionate share of the work. This is referred to as data skew. Where the programmer has control, the number of partitions can be adjusted with functions such as ‘coalesce’ or ‘repartition’. Other cases are outside the programmer’s direct control, for example operations such as ‘join’ that imply a shuffle. For DataFrame and SQL operations, the number of partitions after such a shuffle is determined by the Spark configuration parameter ‘spark.sql.shuffle.partitions’ (200 by default). For jobs with frequent joins, setting this value beforehand helps keep the number of partitions appropriate.

It’s important to note the distinction between ‘coalesce’ and ‘repartition’. ‘repartition’ always triggers a shuffle because it redistributes the entire dataset evenly across the requested number of partitions. This is unavoidable, since uniform distribution is the whole point of repartitioning. ‘coalesce’, on the other hand, merges existing partitions without a full shuffle, with the constraint that it can only reduce the number of partitions, not increase it.

03 – Use the Right Data Structures

Starting from Spark 2.x, the Dataset and DataFrame APIs are recommended over raw RDDs. Datasets are still built on RDDs underneath, but they add optimizations such as the Catalyst optimizer and a much more powerful interface. For example, when a time-consuming join is expressed through the high-level API, the optimizer can automatically switch to a technique such as broadcast join, minimizing shuffling when possible. Use Datasets or DataFrames whenever you can. (The typed Dataset API exists only in Scala and Java; Python and R use DataFrames.)

04 – Use Broadcast Variables

Broadcast variables in Apache Spark are used to efficiently share read-only variables across the nodes in a Spark cluster. Instead of sending a copy of the variable to each task, which can be resource-intensive and inefficient, broadcast variables allow the variable to be cached on each machine and shared among the tasks that run on that machine. This can significantly improve the performance of certain Spark operations by reducing the amount of data that needs to be transferred over the network.

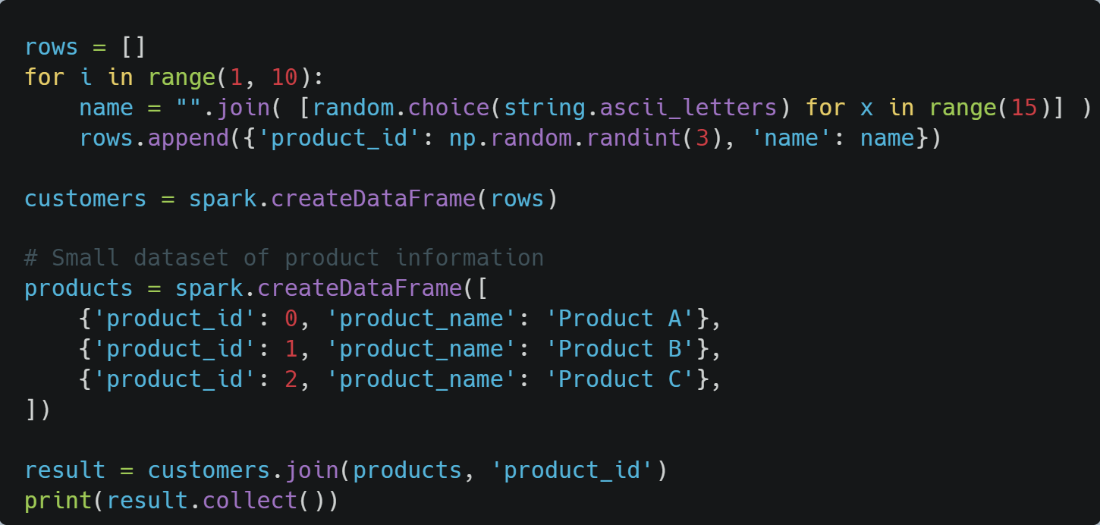

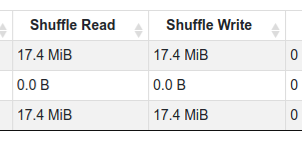

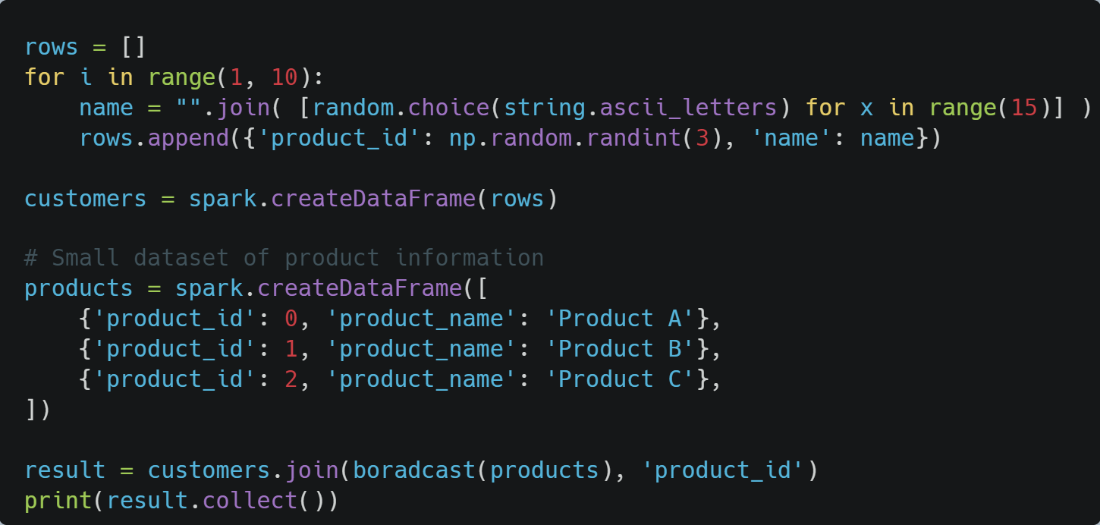

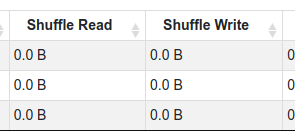

Below is an example of joining two DataFrames, first without broadcast and then with broadcast.

Joining two DataFrames without broadcasting:

Joining two DataFrames with broadcasting:

When you run both examples, the most significant difference is that the broadcast version involves no shuffling. Shuffling is a costly operation in Spark, and broadcasting avoids it at the price of sending a full copy of the smaller DataFrame to every executor, so it only pays off when that DataFrame fits comfortably in memory. Because Spark workloads usually involve large-scale data, cutting this unnecessary network traffic can greatly improve performance.

05 – Persist Intermediate Data

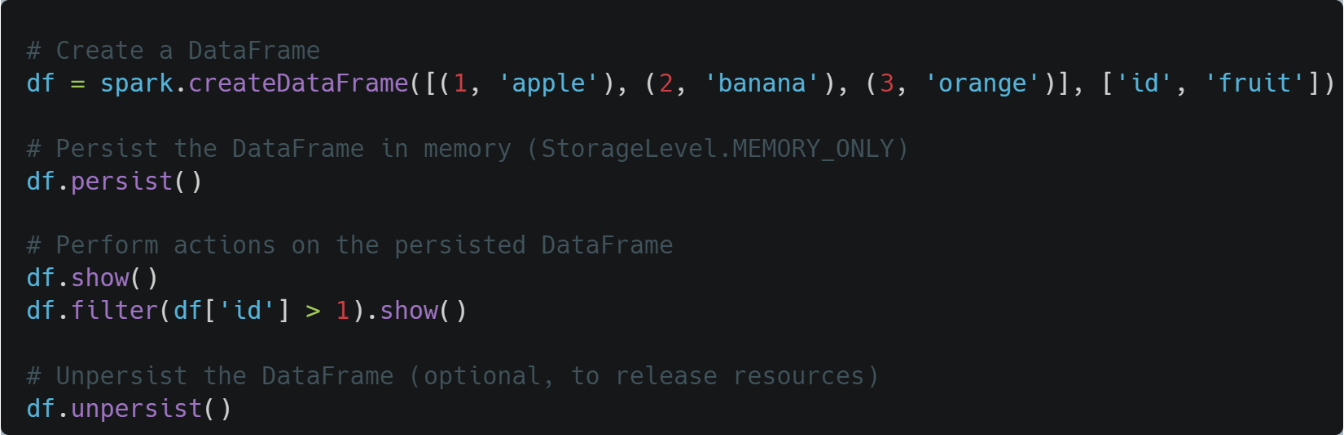

In Apache Spark, persist() and cache() keep a DataFrame, RDD (Resilient Distributed Dataset), or Dataset in memory or on disk. cache() is shorthand for persist() with the default storage level, while persist() also accepts an explicit storage level. Both are particularly useful for iterative or interactive workloads because the data does not have to be recomputed each time it is accessed.

Iterative machine learning algorithms, common in Spark’s MLlib, benefit especially from caching or persisting. They access the same data repeatedly across iterations, which makes caching essential for efficiency.

Persisted data also remains fault tolerant. If a node fails during computation, Spark recomputes the lost partitions from the original source or from intermediate steps, so data integrity is maintained.

Calling persist() on a DataFrame caches it so that subsequent actions reuse the cached data instead of recomputing it, which improves performance. Choose a storage level that fits your specific use case and available resources.