by Soontaek Lim

Training Large Language Models (LLMs) requires significant amounts of memory and computing power. For instance, pre-training a LLaMA 7B model from scratch with a batch size of 1 requires at least 58GB of memory. One method that has emerged to mitigate these memory issues is Low-Rank Adaptation (LoRA). LoRA keeps the original weights fixed and adds trainable low-rank matrices to each layer, which greatly reduces the number of trainable parameters. However, restricting updates to low-rank subspaces limits the parameter search, can alter training dynamics, and may even require a full-rank warm start, so LoRA-style training can perform worse than training with full-rank weights.
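To see where a figure like 58GB comes from, here is a rough count of what full-parameter training with Adam has to keep in memory. This is a back-of-the-envelope sketch under assumptions that are not stated in the article: weights, gradients, and both Adam moment buffers are all held in BF16 (2 bytes per value), and activations and allocator overhead are ignored. The helper name `adam_training_memory_gb` is hypothetical.

```python
# Rough memory estimate for full-parameter training with Adam.
# Assumptions (not from the article): weights, gradients, and both Adam
# moment buffers are held in BF16 (2 bytes per value); activations,
# temporary buffers, and allocator overhead are ignored.

BYTES_PER_VALUE = 2  # BF16
GB = 1e9             # decimal gigabytes


def adam_training_memory_gb(num_params: float) -> dict:
    """Estimate memory (in GB) for weights, gradients, and Adam states."""
    weights = num_params * BYTES_PER_VALUE
    gradients = num_params * BYTES_PER_VALUE
    adam_states = 2 * num_params * BYTES_PER_VALUE  # first + second moments
    return {
        "weights": weights / GB,
        "gradients": gradients / GB,
        "adam_states": adam_states / GB,
        "total": (weights + gradients + adam_states) / GB,
    }


if __name__ == "__main__":
    for name, gb in adam_training_memory_gb(7e9).items():
        print(f"{name:<12} {gb:5.1f} GB")
```

For 7 billion parameters this gives 14 + 14 + 28 = 56GB before any activations are counted, which is consistent with a total of at least 58GB once activations are added.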

GaLore: Gradient Low-Rank Projection

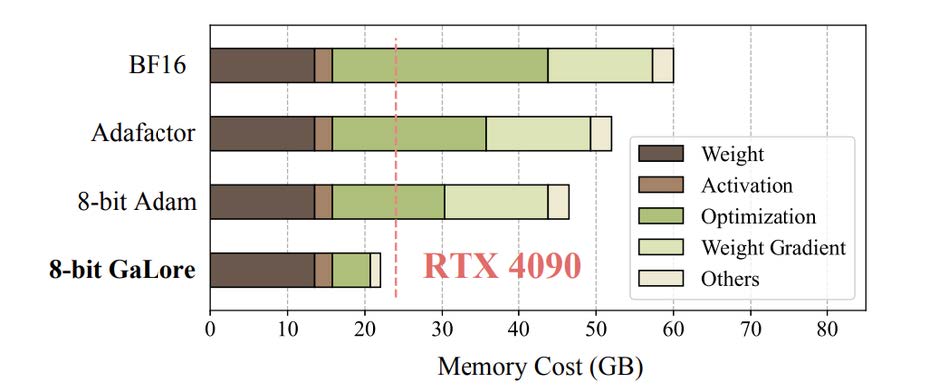

Gradient Low-Rank Projection (GaLore) is a training strategy that allows full-parameter learning while being more memory-efficient than common low-rank adaptation methods such as LoRA. GaLore reduces memory usage in optimizer states by up to 65.5% while maintaining both efficiency and performance, both in pre-training LLaMA 1B and 7B architectures and in fine-tuning RoBERTa on GLUE tasks. 8-bit GaLore goes further, reducing optimizer memory by up to 82.5% and total training memory by 63.3% compared to a BF16 baseline. Notably, it makes it possible, for the first time, to pre-train a 7B model on consumer-grade GPUs with 24GB of memory, such as the NVIDIA RTX 4090, without model parallelism, checkpointing, or offloading strategies.
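The optimizer-state savings can be made concrete with a rough per-layer count. This sketch assumes a GaLore-style layout in which the optimizer stores a projection matrix P (m × r) plus Adam's two moments for the projected r × n gradient, compared with plain Adam's two moments for the full m × n matrix. The function names and the sizes (m = n = 4096, r = 1024) are illustrative assumptions; the count is not meant to reproduce the 65.5% figure, which is measured over a whole model and depends on the rank and on which layers are projected.

```python
def adam_state_values(m: int, n: int) -> int:
    """Adam keeps two moment estimates for every entry of an m x n weight."""
    return 2 * m * n


def galore_state_values(m: int, n: int, r: int) -> int:
    """Assumed GaLore-style layout: projector P (m x r) plus two Adam
    moments for the projected r x n gradient."""
    return m * r + 2 * r * n


if __name__ == "__main__":
    m, n, r = 4096, 4096, 1024  # illustrative sizes, not from the article
    full = adam_state_values(m, n)
    low = galore_state_values(m, n, r)
    print(f"Adam: {full:,}  GaLore: {low:,}  saving: {1 - low / full:.1%}")
```

With these sizes the optimizer state for one layer shrinks from about 33.6M values to about 12.6M, a 62.5% reduction.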

Memory consumption of pre-training a LLaMA 7B model with a token batch size of 256 on a single device, without activation checkpointing and memory offloading.

By allowing full-parameter learning, GaLore overcomes the limitations of LoRA while still being significantly more memory-efficient than conventional low-rank adaptation methods. The key idea is to exploit the slowly changing low-rank structure of the gradient G of a weight matrix W, rather than approximating the weight matrix itself as low-rank: the gradient is projected into a compact low-rank form, the optimizer states are kept in that compact form, and the resulting update is projected back onto the full weight matrix.
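The projection step can be illustrated with NumPy. In this sketch the gradient G is a synthetic matrix built to be approximately low-rank (an assumption standing in for the low-rank structure the method relies on), and the projector is taken from its top singular vectors. The helper `projection_from_gradient` and all sizes are hypothetical. The optimizer would operate on the small `compact` matrix instead of the full gradient.

```python
import numpy as np


def projection_from_gradient(grad: np.ndarray, rank: int) -> np.ndarray:
    """Top-`rank` left singular vectors of `grad`, shape (m, rank)."""
    u, _, _ = np.linalg.svd(grad, full_matrices=False)
    return u[:, :rank]


rng = np.random.default_rng(0)
m, n, r = 64, 128, 8

# Synthetic gradient: exactly rank r plus a little noise (an assumption).
grad = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))
grad += 1e-3 * rng.standard_normal((m, n))

P = projection_from_gradient(grad, r)  # (m, r) orthonormal basis
compact = P.T @ grad                   # (r, n): what the optimizer would see
recon = P @ compact                    # (m, n): projected back to weight space

rel_err = np.linalg.norm(grad - recon) / np.linalg.norm(grad)
print(compact.shape, f"relative reconstruction error {rel_err:.2e}")
```

Because the synthetic gradient is nearly rank r, the r × n projection keeps almost all of it; with a real model the quality of this approximation depends on how low-rank the gradient actually is.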

Learning through low-rank subspaces $\Delta W_{T_1}$ and $\Delta W_{T_2}$ using GaLore

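Weight Update Formula: The weight $W_t$ at training step $t$ is the initial weight plus the updates accumulated in each low-rank subspace visited so far:

$$W_t = W_0 + \Delta W_{T_1} + \Delta W_{T_2} + \cdots + \Delta W_{T_n},$$

where $\Delta W_{T_i} = \eta \sum_{t=0}^{T_i - 1} \tilde{G}_t$ is the sum of all $T_i$ updates made within the $i$-th subspace, $\eta$ is the learning rate, and $\tilde{G}_t$ is the update produced at each step by projecting the gradient into the subspace, applying the optimizer there, and projecting the result back.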

Subspace Switching: The model switches between low-rank subspaces during training. The $n$-th subspace is used for the steps $t \in \left[\sum_{i=1}^{n-1} T_i, \sum_{i=1}^{n} T_i\right]$, where $T_i$ denotes the number of updates within the $i$-th subspace.
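The update rule and the switching schedule can be sketched as a small NumPy loop on a toy quadratic problem. Everything here is an assumption made for illustration: the helper `galore_adam_sketch`, the toy objective, the learning rate, and the choice to reset Adam's moments at every switch. The defaults `update_gap=200` and `scale=0.25` mirror the subspace frequency T and scale factor α used in the experiments described later in the article. Note that the code uses the ordinary gradient and subtracts the update, so the sign convention differs from writing the update as an addition.

```python
import numpy as np


def galore_adam_sketch(grad_fn, W, steps=600, rank=4, update_gap=200,
                       lr=0.1, scale=0.25, betas=(0.9, 0.999), eps=1e-8):
    """Hypothetical GaLore-style Adam loop on a single weight matrix W (m x n).

    Every `update_gap` steps (playing the role of T_i) the projector P is
    recomputed from the SVD of the current gradient, starting a new subspace.
    Adam's moments live in the projected (rank x n) space; this sketch resets
    them at each switch, which is a simplifying choice.
    """
    b1, b2 = betas
    P = M = V = None
    k = 0  # steps taken inside the current subspace (for bias correction)
    for t in range(steps):
        G = grad_fn(W)
        if t % update_gap == 0:
            U, _, _ = np.linalg.svd(G, full_matrices=False)
            P = U[:, :rank]
            M = np.zeros((rank, G.shape[1]))
            V = np.zeros((rank, G.shape[1]))
            k = 0
        R = P.T @ G                   # project gradient into the subspace
        k += 1
        M = b1 * M + (1 - b1) * R
        V = b2 * V + (1 - b2) * R**2
        N = (M / (1 - b1**k)) / (np.sqrt(V / (1 - b2**k)) + eps)
        W = W - lr * scale * (P @ N)  # project back; descent step on full W
    return W


def loss(W, target):
    return 0.5 * np.sum((W - target) ** 2)


rng = np.random.default_rng(0)
target = rng.standard_normal((16, 32))
W0 = np.zeros_like(target)
W_final = galore_adam_sketch(lambda W: W - target, W0)
print(f"loss: {loss(W0, target):.1f} -> {loss(W_final, target):.1f}")
```

With 600 steps and a gap of 200, the loop visits three subspaces; each one is chosen from the gradient that remains after the previous subspace has been optimized.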

The figure above illustrates the trajectory of $W_t$ through multiple low-rank subspaces. Allowing the model to traverse several subspaces is crucial for successful pre-training of LLMs: the updates made within a single subspace form a matrix of rank at most $r$, but the sum of updates from different subspaces can have a much higher rank, so the accumulated change to the weights is not confined to one rank-$r$ subspace as it is with LoRA. GaLore obtains each subspace from the gradient itself, using a singular value decomposition (SVD) of the current gradient to recompute the projection whenever it switches subspaces. Because the optimizer states live only in the projected low-rank space, memory stays low while the weights themselves still receive full-rank updates over the course of training.
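A quick numerical check shows why moving through several subspaces matters: updates confined to one rank-r subspace can never exceed rank r, but updates accumulated across different subspaces can. To keep the sketch short, random orthonormal bases stand in for the SVD-derived projectors (an assumption), and the sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n, r, num_subspaces = 32, 48, 4, 3

total_update = np.zeros((m, n))
ranks = []
for i in range(num_subspaces):
    # Stand-in for the projector of subspace i: a random orthonormal m x r basis.
    P, _ = np.linalg.qr(rng.standard_normal((m, r)))
    # All updates made inside this subspace have columns in span(P): rank <= r.
    delta = P @ rng.standard_normal((r, n))
    total_update += delta
    ranks.append((np.linalg.matrix_rank(delta),
                  np.linalg.matrix_rank(total_update)))

for i, (block_rank, cumulative_rank) in enumerate(ranks, start=1):
    print(f"subspace {i}: block rank {block_rank}, accumulated rank {cumulative_rank}")
```

Each block has rank 4, while the accumulated update grows to rank 8 and then 12, matching the sum $\Delta W_{T_1} + \Delta W_{T_2} + \cdots$ in the weight update formula.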

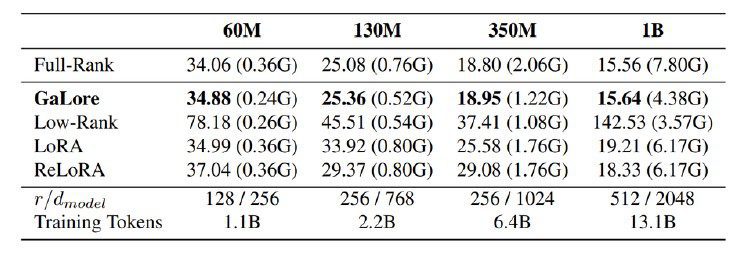

Comparison with low-rank algorithms on pre-training LLaMA models of various sizes on the C4 dataset. Validation perplexity is reported, along with an estimate of the total memory for parameters and optimizer states in BF16 format.

This table demonstrates GaLore’s memory efficiency through experimental results. The experimental setup is outlined as follows:

- For GaLore, the subspace frequency T is fixed at 200, with a scale factor α of 0.25 applied across all model sizes mentioned in the table.

- A consistent rank r is selected for all low-rank methods for each model size, and these methods are applied to all multi-head attention and feed-forward layers within the models.

- Training is conducted using the Adam optimizer, following its default hyperparameters.

- Memory usage is estimated based on the BF16 format, accounting for the memory required for weight parameters and the optimizer state.

The table shows that GaLore outperforms the other low-rank methods and achieves performance comparable to full-rank training.

Specifically, for the 1B model, GaLore even outperforms the full-rank baseline when r = 1024 is used instead of r = 512. Furthermore, compared to LoRA and ReLoRA, GaLore requires less memory for storing model parameters and optimizer states.
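A per-layer count makes the comparison with LoRA concrete. This sketch assumes a single m × n layer with m ≤ n, Adam as the optimizer, LoRA adapters B (m × r) and A (r × n) on top of a fixed m × n weight, and a GaLore projector on the m side. The function names and sizes are illustrative assumptions, ReLoRA is not modeled, and the counts are numbers of stored values (multiply by 2 for bytes in BF16).

```python
def lora_memory_values(m: int, n: int, r: int) -> dict:
    # Fixed W (m x n) plus trainable adapters B (m x r) and A (r x n);
    # Adam keeps two moments for each adapter entry.
    weights = m * n + m * r + r * n
    optimizer = 2 * (m * r + r * n)
    return {"weights": weights, "optimizer": optimizer, "total": weights + optimizer}


def galore_memory_values(m: int, n: int, r: int) -> dict:
    # Full-rank W (m x n); the optimizer stores projector P (m x r)
    # and Adam moments for the projected (r x n) gradient.
    weights = m * n
    optimizer = m * r + 2 * r * n
    return {"weights": weights, "optimizer": optimizer, "total": weights + optimizer}


if __name__ == "__main__":
    m, n, r = 4096, 4096, 512  # illustrative sizes, not from the article
    for name, fn in [("LoRA", lora_memory_values), ("GaLore", galore_memory_values)]:
        counts = fn(m, n, r)
        print(f"{name:<6} " + ", ".join(f"{k}={v / 1e6:.1f}M" for k, v in counts.items()))
```

GaLore stores no extra adapter weights and keeps only one projector plus the projected moments, so both its weight and optimizer counts come out lower than LoRA's at the same rank.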

Conclusion

GaLore significantly reduces memory usage during the pre-training and fine-tuning of Large Language Models (LLMs) while maintaining performance. This reduces the dependence on large computing systems and suggests the potential for substantial cost savings. However, the paper acknowledges that GaLore still leaves several issues unresolved: applying it to other types of models, such as vision transformers and diffusion models; further improving memory efficiency through quantization and more efficient parameterization; and exploring elastic data-distributed training on consumer-grade hardware.

Despite these unresolved issues, GaLore facilitates the training of LLMs using consumer-grade hardware, enabling broader participation. This increased accessibility could accelerate the advancement of LLM research and, with more community involvement, it is hoped that GaLore will overcome its current challenges and evolve into a valuable tool for the LLM community.

Reference:

GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection (arxiv.org)